Permutation-based post hoc inference for two-group differential gene expression studies: RNAseq data

Nicolas Enjalbert Courrech and Pierre Neuvial

2025-07-25

Source:vignettes/post-hoc_differential-expression_RNAseq.Rmd

post-hoc_differential-expression_RNAseq.RmdThis vignette illustrates the relevance of permutation-based post hoc bounds on false positives for the differential analysis of (bulk) RNA sequencing (RNAseq) data. We illustrate how the post hoc methods introduced by Blanchard, Neuvial, and Roquain (2020) may be used to

- build confidence curves (envelopes) for the true or false positives and define differentially expressed genes accordingly

- perform statistical inference on the output of volcano plots

The methods described in this vignette have been introduced in the paper Blanchard, Neuvial, and Roquain (2020) and in the book chapter Blanchard, Neuvial, and Roquain (2021). A shiny application for volcano plots is also available at https://shiny-iidea-sanssouci.apps.math.cnrs.fr/.

require("ggplot2") || install.packages("ggplot2")

require("sanssouci") || remotes::install_github("sanssouci-org/sanssouci@develop")Set the seed of the random number generator for numerical reproducibility of the results:

set.seed(20200924)Motivation: a differential gene expression study

We focus on differential gene expression studies in cancerology. These studies aim at identifying genes whose mean expression level differs significantly between two (or more) populations, based on a sample of gene expression measurements from individuals from these populations.

data("RNAseq_blca", package = "sanssouci.data")

Y <- RNAseq_blca

groups <- ifelse(colnames(RNAseq_blca) == "III", 1, 0)

rm(RNAseq_blca)This data set consists of gene expression measurements for patients from the Cancer Genome Atlas Urothelial Bladder Carcinoma (TCGA-BLCA) data collection (Cancer Genome Atlas Research Network et al. (2014)). Following a standard practice in RNAseq data analysis, we convert these measurements to to count per millions (CPM) in order to make them comparable across samples, and filter out genes with very low counts in all samples:

CPM <- Y/colSums(Y)*1e6

# plot(density(rowMaxs(log(1 + CPM))))

ww <- which(rowMaxs(CPM) < 10)

ww <- which(rowQuantiles(log(1 + CPM), prob = 0.75) < log(1+5))

Y <- CPM[-ww, ]

plot(density(log(1 + Y)))

m <- nrow(Y)The patients are classified into two subgoups, according to their clinical stage (II or III):

table(groups)

#> groups

#> 0 1

#> 130 140The goal of this study is to understand the molecular differences at the gene expression level between the stage II and stage III populations. Each patient is characterized by a vector of gene expression values. We aim at addressing the following question:

For which genes is there a difference in the distribution of expression levels between the stage II and stage III populations?

This question can be addressed by performing one statistical test per

gene, and to define “differentially expressed” genes as those passing

some significance threshold. Below, we use the Wilcoxon rank sum (or

Mann-Whitney) test for comparing two independent samples. This is a

rank-based non-parametric test. A fast version of this test is

implemented in the sanssouci::rowWilcoxonTests

function.

To obtain post hoc bounds, we create an object of class

SansSouci and run the fit method to perform

calibration with

permutations. The target risk level is set to

,

meaning that all of the statements made below will be true with

probability greater than

.

alpha <- 0.1

obj <- SansSouci(Y = log(1 + Y), groups = groups)

res <- fit(obj, B = 1000, alpha = alpha, family = "Simes",

rowTestFUN = rowWilcoxonTests)For comparison purposes we also run the (parametric) Simes method introduced by Goeman and Solari (2011):

Confidence curves for “top-” lists

In the absence of prior information on genes, a natural idea is to rank them by decreasing statistical significance, and a natural question to ask is:

Can we provide a lower confidence curve on the number (or proportion) of truly differentially expressed genes among the most significant genes?

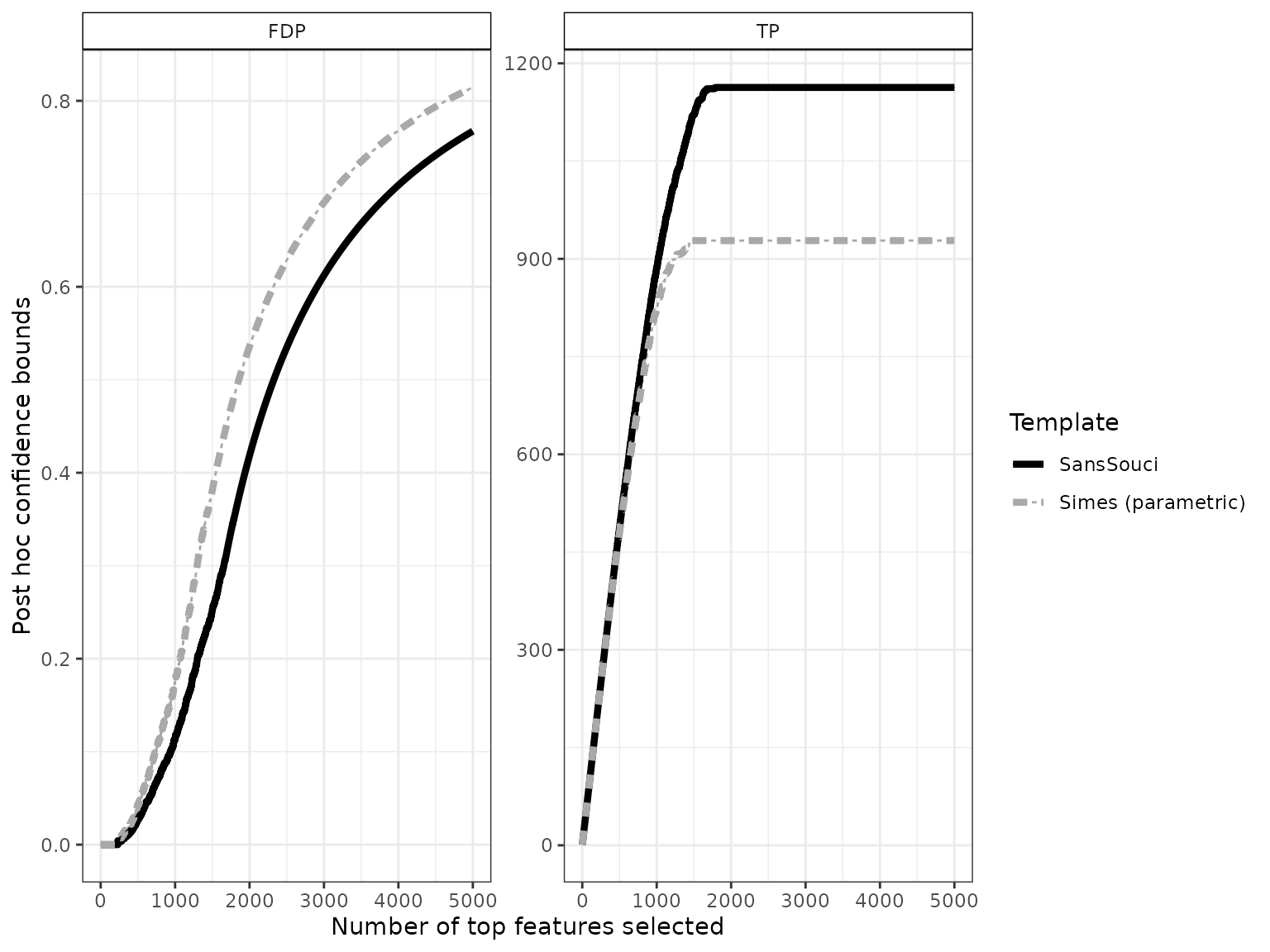

The confidence curve obtained by calibration is the solid black line in the figure below:

conf_bounds <- lapply(resList, predict, all = TRUE)

cols <- c("black", "darkgray")

p <- plotConfCurve(conf_bounds, xmax = 5000, cols = cols, legend.title = "Template") +

geom_line(size = 1.5)

#> Warning: Using `size` aesthetic for lines was deprecated in ggplot2 3.4.0.

#> ℹ Please use `linewidth` instead.

#> This warning is displayed once every 8 hours.

#> Call `lifecycle::last_lifecycle_warnings()` to see where this warning was

#> generated.

p

# ggsave(p, file = "conf-curve.pdf", width = 6, height = 4)This plot can be interpreted as follows: among the 2000 most significant genes, the number of truly differentially expressed genes is at least 1163 (right panel). Equivalently, the FDP among these 2000 genes is at most 0.42 (left panel).

The dashed gray curve is obtained by the parametric Simes method introduced by Goeman and Solari (2011). The comparison between the two curves illustrates the gain in power obtained by using permutations methods to adapt to the dependence between genes. In this example, the parametric Simes method gives the following guarantees: among the 2000 most significant genes, the number of truly differentially expressed genes is at least 928 (right panel). Equivalently, the FDP among these 2000 genes is at most 0.54 (left panel).

Differentially expressed genes

In this section we show how to the above curves may be used to address the question:

Which genes are differentially expressed with high probability?

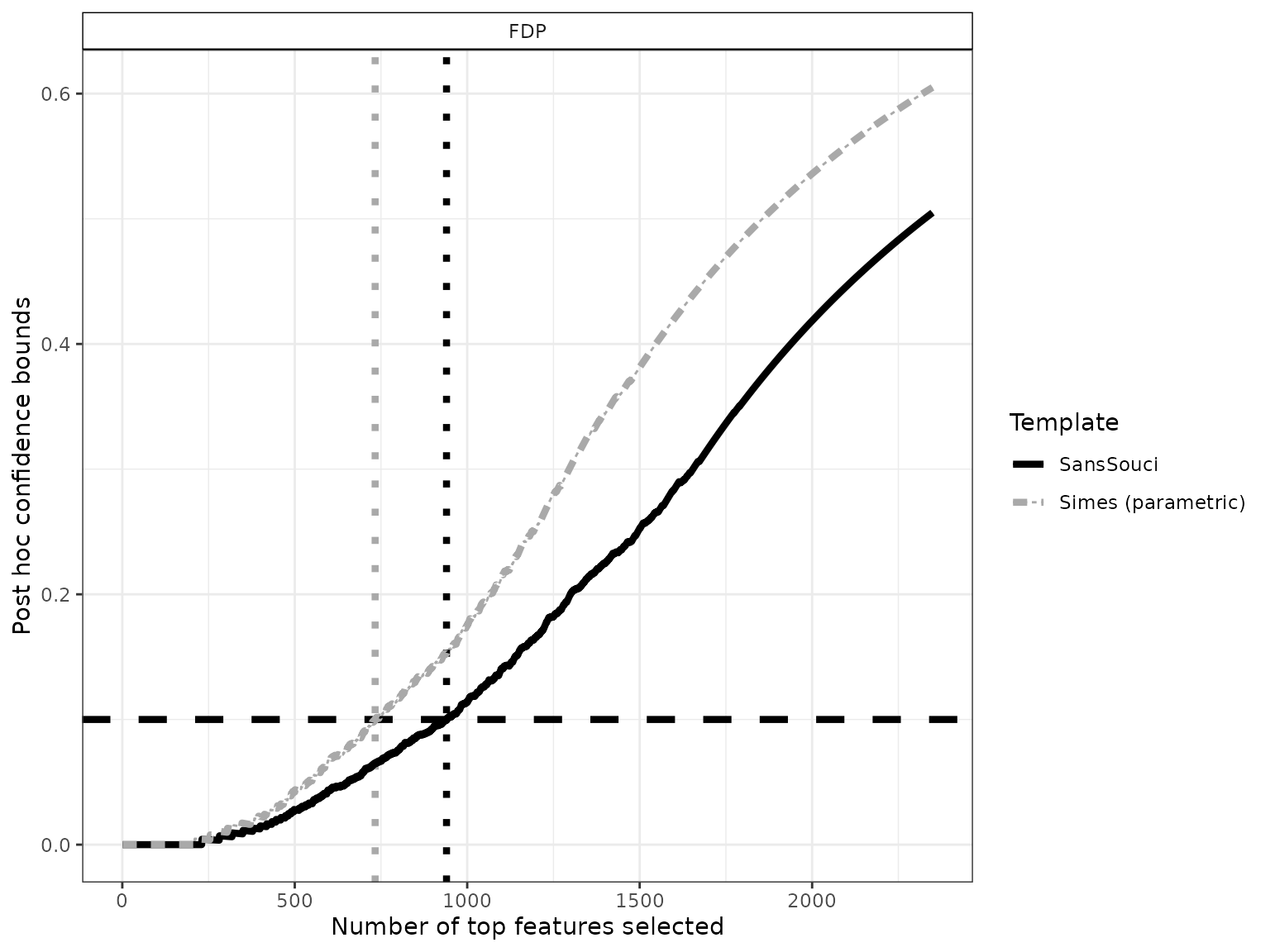

To do so, we define differentially expressed genes as the largest set of genes for which the FDP bound is less than a user-given value, for example . This corresponds to drawing a horizontal line in the preceding plot:

q <- 0.1 # FDP budget (user-defined)

FDP <- lapply(resList, predict, what = "FDP", all = TRUE)

n_DEG <- sapply(FDP, function(x) sum(x <= q))

size <- 1.5

p <- plotConfCurve(FDP, xmax = 2.5*n_DEG, col = cols, legend.title = "Template") +

geom_hline(yintercept = q, linetype = "dashed", size = size) +

geom_vline(xintercept = n_DEG, linetype = "dotted", col = cols, size = size) +

geom_line(size = size)

p

# ggsave(p, file = "conf-curve_DEG.pdf", width = 6, height = 4)Using , we obtain 940 differentially expressed genes. Note that this gene list has a clear statistical interpretation: with probability , the proportion of false positives (that is, genes that are called DE by mistake) is less than .

The above example also illustrates the increase in power obtained by calibration, since the parametric Simes method yields a subset of “only” 733 genes called differentially expressed (with identical statistical guarantees).

Comparison to FDR control

We argue that ensuring with high probability that the FDP is less than some user-defined budget, as done above, is more interpretable than the widely used False Discovery Rate (FDR) control introduced by Benjamini and Hochberg (1995). Indeed, FDR control guarantees that the expected (or average) FDP among DE genes is less than some user-defined FDR budget. However, the true FDP may well be far from its expected value, especially since the variability of the FDP is known to increase with the degree of dependence between genes.

adjp <- p.adjust(pValues(res), method = "BH")

FDR_level <- 0.05

n_BH <- sum(adjp < FDR_level)

n_BH

#> [1] 1713In our case, the BH procedure would consider 1713 genes as differentially expressed at the FDR level 0.05. This set of DE genes is larger than the 940 genes called DE by our method, but the latter comes with stronger and more interpretable statistical guarantees than FDR control.

Volcano plots

A classical practice in gene expression studies is to define DE genes as those passing both a significance filter (small -value) and an effect size or “fold change” filter. Here, the fold change of a gene is defined as the difference between the expression medians (on the log scale) of the two groups compared. This double selection by -value and fold change corresponds to two sets of genes, with positive/negative fold change, which can be represented in the following plot:

volcanoPlot(res, p = 1e-4, r = 0.5)

This type of plot is called a “volcano plot” Cui and Churchill (2003). Post hoc inference makes it possible to obtain statistical guarantees on selections such as the ones represented in the above figure.

Custom statistics: example using limma-voom

Post hoc bounds can be calculated for any gene selection. In

particular, even if Wilcoxon tests have been performed for the

calibration of the post hoc bounds, it is possible to rely on other

statistics to select genes of interest. In this section, we

illustrate this idea by making a volcano plot based on the

-values

and log-fold changes obtained from the limma-voom method of Law et al. (2014) implemented in the

limma package (Ritchie et al.

(2015)). The next code chunk is adapted from the vignette of the

limma package:

library(limma)

library(edgeR)

d <- DGEList(Y)

#> Repeated column names found in count matrix

d <- calcNormFactors(d)

Grp <- as.factor(groups)

mm <- model.matrix(~0 + Grp)

y <- voom(d, mm, plot = FALSE)

res_lm <- lmFit(y, mm)

contr <- makeContrasts(Grp1 - Grp0, levels = colnames(coef(res_lm)))

res_fit <- contrasts.fit(res_lm, contr)

res_eb <- eBayes(res_fit)

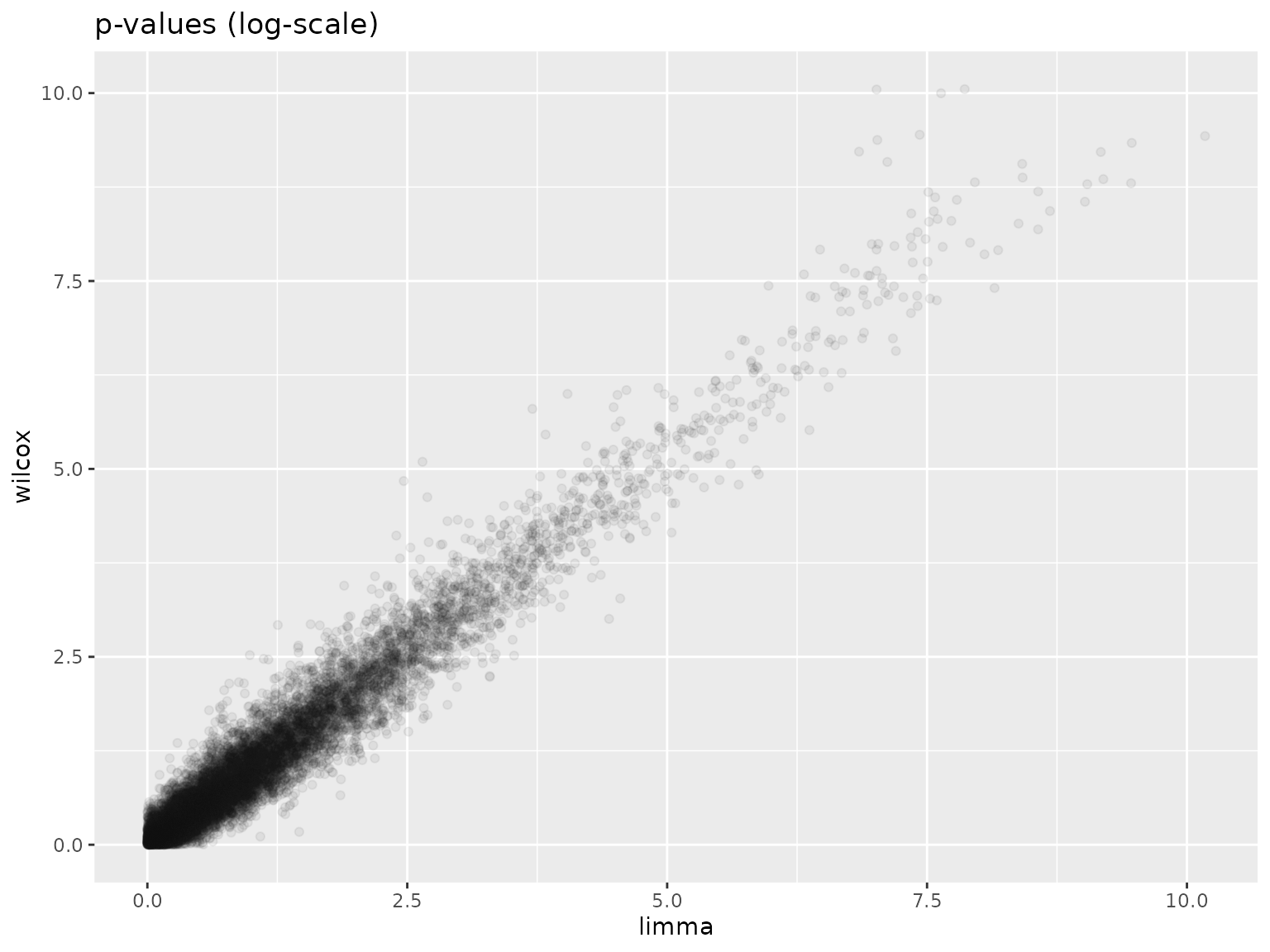

TT <- topTable(res_eb, sort.by = "none", number = Inf)The next plot suggests that the -values obtained from limma-voom and from Wilcoxon tests are consistent.

df <- data.frame(wilcox = -log10(as.vector(pValues(res))), limma = -log10(TT$P.Value))

ggplot(df, aes(x = limma, y = wilcox)) +

geom_point(color = "#10101010") +

ggtitle("p-values (log-scale)")

Finally we obtain the volcano plot based on limma statistics.

volcanoPlot(res,

fold_changes = TT$logFC,

p_values = TT$P.Value,

p = 1e-4, r = 0.5)

Session information

sessionInfo()

#> R version 4.5.1 (2025-06-13)

#> Platform: x86_64-pc-linux-gnu

#> Running under: Ubuntu 24.04.2 LTS

#>

#> Matrix products: default

#> BLAS: /usr/lib/x86_64-linux-gnu/openblas-pthread/libblas.so.3

#> LAPACK: /usr/lib/x86_64-linux-gnu/openblas-pthread/libopenblasp-r0.3.26.so; LAPACK version 3.12.0

#>

#> locale:

#> [1] LC_CTYPE=C.UTF-8 LC_NUMERIC=C LC_TIME=C.UTF-8

#> [4] LC_COLLATE=C.UTF-8 LC_MONETARY=C.UTF-8 LC_MESSAGES=C.UTF-8

#> [7] LC_PAPER=C.UTF-8 LC_NAME=C LC_ADDRESS=C

#> [10] LC_TELEPHONE=C LC_MEASUREMENT=C.UTF-8 LC_IDENTIFICATION=C

#>

#> time zone: UTC

#> tzcode source: system (glibc)

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> other attached packages:

#> [1] edgeR_4.6.3 limma_3.64.1 matrixStats_1.5.0 sanssouci_0.16.0

#> [5] ggplot2_3.5.2

#>

#> loaded via a namespace (and not attached):

#> [1] Matrix_1.7-3 gtable_0.3.6 jsonlite_2.0.0 compiler_4.5.1

#> [5] crayon_1.5.3 jquerylib_0.1.4 systemfonts_1.2.3 scales_1.4.0

#> [9] textshaping_1.0.1 statmod_1.5.0 yaml_2.3.10 fastmap_1.2.0

#> [13] lattice_0.22-7 R6_2.6.1 labeling_0.4.3 generics_0.1.4

#> [17] knitr_1.50 tibble_3.3.0 desc_1.4.3 bslib_0.9.0

#> [21] pillar_1.11.0 RColorBrewer_1.1-3 rlang_1.1.6 cachem_1.1.0

#> [25] xfun_0.52 fs_1.6.6 sass_0.4.10 cli_3.6.5

#> [29] pkgdown_2.1.3 withr_3.0.2 magrittr_2.0.3 matrixTests_0.2.3

#> [33] locfit_1.5-9.12 digest_0.6.37 grid_4.5.1 lifecycle_1.0.4

#> [37] vctrs_0.6.5 evaluate_1.0.4 glue_1.8.0 farver_2.1.2

#> [41] codetools_0.2-20 ragg_1.4.0 rmarkdown_2.29 tools_4.5.1

#> [45] pkgconfig_2.0.3 htmltools_0.5.8.1References

& Hall/CRC. https://hal.archives-ouvertes.fr/hal-02320543.